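1 System Environment

Hardware environment (Ascend/GPU/CPU): Ascend/GPU/CPU

MindSpore version: any

Execution mode (PyNative/Graph): any

Python version: Python=3.7.5

Operating system platform: Linux

2 Error Information

2.1 Problem Description

Constructing an optimizer derived from mindspore.nn.Optimizer (nn.Momentum in this case) with grouped parameters raises an error.

2.2 Error Message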

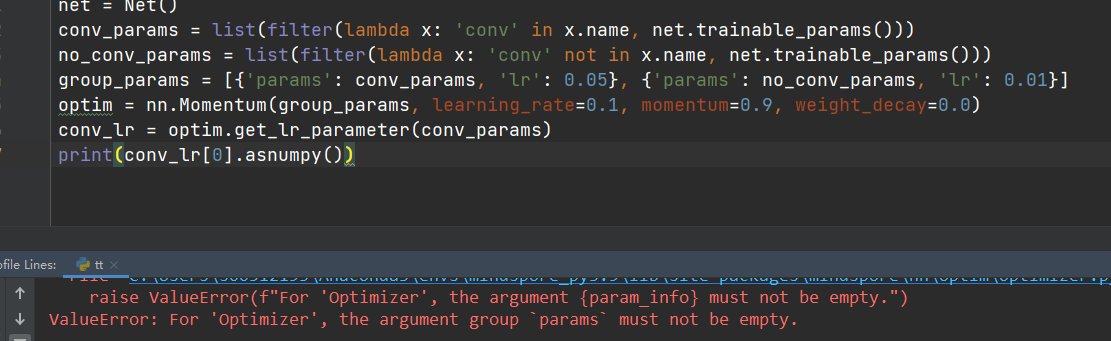

RuntimeError: For 'Optimizer', the argument group params must not be empty.

2.3 Script Code

from mindspore import nn

net = Net()
conv_params = list(filter(lambda x: 'conv' in x.name, net.trainable_params()))
no_conv_params = list(filter(lambda x: 'conv' not in x.name, net.trainable_params()))
group_params = [{'params': conv_params, 'lr': 0.05}, {'params': no_conv_params, 'lr': 0.01}]
optim = nn.Momentum(group_params, learning_rate=0.1, momentum=0.9, weight_decay=0.0)
conv_lr = optim.get_lr_parameter(conv_params)
print(conv_lr[0].asnumpy())

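3 Root Cause Analysis

The error occurs because the 'params' list of a parameter group must not be empty. The sample code implicitly assumes that Net is a network containing convolution layers, but the sample does not show the network definition. If Net has no parameters whose names match the filters, the filtered lists are empty and the optimizer rejects them.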
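The cause can be illustrated without MindSpore. The following is a minimal sketch, not MindSpore code: `FakeParam` and `build_groups` are hypothetical names introduced here, and `FakeParam` only imitates the `name` attribute of a real parameter. It shows how filtering by name can produce empty lists, and one possible way to drop empty groups before they reach the optimizer.

```python
# Hypothetical stand-in for a MindSpore Parameter: only `name` matters here,
# so the sketch runs without MindSpore installed.
class FakeParam:
    def __init__(self, name):
        self.name = name


def build_groups(params, keyword="conv", lr_match=0.05, lr_other=0.01):
    """Split params by name and keep only the non-empty groups."""
    matched = [p for p in params if keyword in p.name]
    others = [p for p in params if keyword not in p.name]
    groups = [
        {"params": matched, "lr": lr_match},
        {"params": others, "lr": lr_other},
    ]
    # Drop groups whose 'params' list is empty; per the error message,
    # an empty 'params' list is what the optimizer rejects.
    return [g for g in groups if g["params"]]


# A network without trainable parameters yields no usable group at all.
print(len(build_groups([])))  # 0

# One conv weight plus one extra parameter yields two non-empty groups.
params = [FakeParam("conv.weight"), FakeParam("param")]
print([len(g["params"]) for g in build_groups(params)])  # [1, 1]
```

If the returned list is empty, the network has no trainable parameters and an optimizer cannot be built for it, so the network definition itself needs to be checked.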

The following code constructs a Net that contains no parameters, which reproduces the error:

RuntimeError: For 'Optimizer', the argument group params must not be empty.

import mindspore.ops as ops
from mindspore import nn

class Net(nn.Cell):
    def __init__(self):
        super().__init__()
        # MatMul is an operator without weights, so Net has no parameters
        self.matmul = ops.MatMul()

    def construct(self, x):
        out = self.matmul(x, x)
        return out

net = Net()
# trainable_params() is empty here, so both filtered lists are empty
conv_params = list(filter(lambda x: 'conv' in x.name, net.trainable_params()))
no_conv_params = list(filter(lambda x: 'conv' not in x.name, net.trainable_params()))
group_params = [{'params': conv_params, 'lr': 0.05}, {'params': no_conv_params, 'lr': 0.01}]
# The error is raised here because the groups contain no parameters
optim = nn.Momentum(group_params, learning_rate=0.1, momentum=0.9, weight_decay=0.0)
conv_lr = optim.get_lr_parameter(conv_params)
print(conv_lr[0].asnumpy())

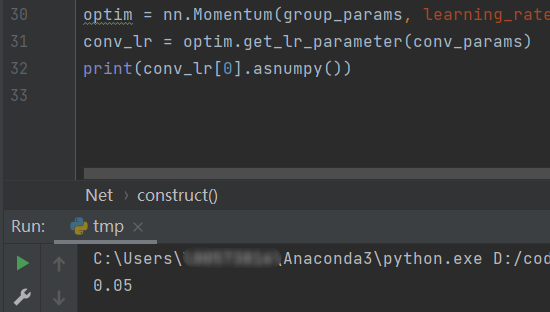

运行结果:

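4 Solution

Modify the code as follows so that Net contains both convolution and non-convolution parameters, which resolves the error: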

import numpy as np
import mindspore.ops as ops
from mindspore import nn, Tensor, Parameter

class Net(nn.Cell):
    def __init__(self):
        super().__init__()
        self.matmul = ops.MatMul()
        # Convolution layer: its parameter names contain 'conv'
        self.conv = nn.Conv2d(1, 6, 5, pad_mode="valid")
        # Extra parameter whose name does not contain 'conv'
        self.param = Parameter(Tensor(np.array([1.0], np.float32)))

    # construct is not called in this script; only the parameters matter for the optimizer
    def construct(self, x):
        x = self.conv(x)
        x = x * self.param
        out = self.matmul(x, x)
        return out

net = Net()
conv_params = list(filter(lambda x: 'conv' in x.name, net.trainable_params()))
no_conv_params = list(filter(lambda x: 'conv' not in x.name, net.trainable_params()))
group_params = [{'params': conv_params, 'lr': 0.05}, {'params': no_conv_params, 'lr': 0.01}]
optim = nn.Momentum(group_params, learning_rate=0.1, momentum=0.9, weight_decay=0.0)
# Query the learning rate assigned to the convolution parameters
conv_lr = optim.get_lr_parameter(conv_params)
print(conv_lr[0].asnumpy())

Output of the code: