1 System Environment

Hardware environment (Ascend/GPU/CPU): GPU

MindSpore version: mindspore=2.0

Execution mode (PyNative/Graph): any

Python version: Python=3.7

OS platform: any

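2 Error Information

2.1 Problem Description

Running the code below raises an out-of-memory error because the data being processed is too large. Strangely, the `ops.pad` call itself appears to complete normally. The error is only raised later, when the result is printed with `print(dead.shape)`, although one would expect it to be raised at the `ops.pad` call.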

2.2 Error Message

```text
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.247 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:267] CalMemBlockAllocSize] Memory not enough: current free memory size[13904707584] is smaller than required size[17374927872].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.300 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:564] DumpDynamicMemPoolDebugInfo] Start dump dynamic memory pool debug info.
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.318 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:523] operator()] Common mem all mem_block info: counts[2].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.336 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:527] operator()] MemBlock info: number[0] mem_buf_counts[4] base_address[0x7ef18c000000] block_size[8589934592].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.359 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:527] operator()] MemBlock info: number[1] mem_buf_counts[1] base_address[0x7ef408000000] block_size[1073741824].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.376 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:544] operator()] Common mem all idle mem_buf info: counts[2].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.394 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:554] operator()] Common mem total allocated memory[9663676416], used memory[7904168960], idle memory[1759507456].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.410 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:523] operator()] Persistent mem all mem_block info: counts[0].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.426 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:544] operator()] Persistent mem all idle mem_buf info: counts[0].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.441 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:554] operator()] Persistent mem total allocated memory[0], used memory[0], idle memory[0].
[WARNING] PRE_ACT(505232,7efd06d50700,python):2023-07-14-16:49:38.341.457 [mindspore/ccsrc/backend/common/mem_reuse/mem_dynamic_allocator.cc:567] DumpDynamicMemPoolDebugInfo] Finish dump dynamic memory pool debug info.
[ERROR] DEVICE(505232,7efd06d50700,python):2023-07-14-16:49:38.341.481 [mindspore/ccsrc/runtime/pynative/run_op_helper.cc:372] MallocForKernelOutput] Allocate output memory failed, node:Default/PadV3-op0
[WARNING] DEVICE(505232,7efd06d50700,python):2023-07-14-16:49:38.348.445 [mindspore/ccsrc/runtime/pynative/async/async_queue.cc:67] WorkerLoop] Run task failed, error msg:Malloc for kernel output failed, Memory isn't enough, node:Default/PadV3-op0

----------------------------------------------------
- C++ Call Stack: (For framework developers)
----------------------------------------------------
mindspore/ccsrc/runtime/pynative/run_op_helper.cc:643 LaunchKernels

---------------------------------------------------------------------------
RuntimeError Traceback (most recent call last)
/data1/myz/netsv/utils/Test.py in <module>
42 print("mindspore time: ", end_time - start_time)
43
---> 44 print(dead.shape)
45
46

~/miniconda3/lib/python3.7/site-packages/mindspore/common/_stub_tensor.py in shape(self)
88 if self.stub:
89 if not hasattr(self, "stub_shape"):
---> 90 self.stub_shape = self.stub.get_shape()
91 return self.stub_shape
92 return self.tensor.shape

RuntimeError: Malloc for kernel output failed, Memory isn't enough, node:Default/PadV3-op0

----------------------------------------------------
- C++ Call Stack: (For framework developers)
----------------------------------------------------
mindspore/ccsrc/runtime/pynative/run_op_helper.cc:643 LaunchKernels
```

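2.3 Script Code

```python
import time

import numpy as np
import mindspore
import mindspore.ops as ops

mindspore.set_context(device_target="GPU", device_id=1)

# Input of shape (20, 999, 999, 99) as float32: about 7.9 GB on the device
np_tensor = np.random.randn(20, 999, 999, 99)
dead = mindspore.Tensor(np_tensor, mindspore.float32)

# Pad the last dim by (0, 99) and the second-to-last dim by (99, 0)
padding = (0, 99, 99, 0)

start_time = time.time()
dead = ops.pad(dead, padding, mode="constant", value=0)  # returns without raising
end_time = time.time()
print("mindspore time: ", end_time - start_time)

print(dead.shape)  # the out-of-memory RuntimeError is raised here
```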

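3 Root Cause Analysis

The padded output tensor is too large to allocate on the GPU: according to the log, the PadV3 output needs 17374927872 bytes while only 13904707584 bytes are free. As a result, none of the output tensor's attributes can be printed, not just `shape`.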
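The sizes in the log can be reproduced by hand. The sketch below assumes that `ops.pad` consumes `padding` as (before, after) pairs starting from the last dimension, and that the memory pool rounds each request up to a 512-byte boundary (an assumption; with it, the result matches the logged "required size" exactly). The helper names `padded_shape`, `nbytes`, and `align_up` are hypothetical.

```python
def padded_shape(shape, padding):
    """Output shape of a constant pad; `padding` holds (before, after) pairs
    starting from the LAST dimension."""
    if len(padding) % 2 != 0 or len(padding) // 2 > len(shape):
        raise ValueError("padding must hold (before, after) pairs for at most len(shape) dims")
    out = list(shape)
    for i in range(len(padding) // 2):
        dim = len(shape) - 1 - i  # pair i pads the i-th dimension counted from the end
        out[dim] += padding[2 * i] + padding[2 * i + 1]
    return tuple(out)


def nbytes(shape, itemsize=4):
    """Bytes needed by a dense tensor (float32 uses 4 bytes per element)."""
    n = itemsize
    for d in shape:
        n *= d
    return n


def align_up(size, alignment=512):
    """Round `size` up to a multiple of `alignment` (assumed pool alignment)."""
    return (size + alignment - 1) // alignment * alignment


out_shape = padded_shape((20, 999, 999, 99), (0, 99, 99, 0))
required = align_up(nbytes(out_shape))
free = 13904707584  # "current free memory size" from the log

print(out_shape)          # (20, 999, 1098, 198)
print(nbytes(out_shape))  # 17374927680
print(required)           # 17374927872, the "required size" in the log
print(required > free)    # True
```

The output alone needs roughly 17.4 GB, more than the free pool, which is why the allocation for `PadV3-op0` fails.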

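To continue, reduce the shape.

However, the fact that the error only appears at `print`, rather than at the `ops.pad` call, should be reported as an issue against the operator for internal analysis. The log offers a hint: the failure is reported from the PyNative asynchronous task queue (`async_queue.cc`, `WorkerLoop`), and the traceback passes through `_stub_tensor.py`. This suggests that `ops.pad` returns a placeholder (stub) tensor immediately and that the allocation error is only raised when the result is first read.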
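The traceback runs through `async_queue.cc` and `_stub_tensor.py`, which is consistent with asynchronous dispatch: the call returns a placeholder at once, and a failure in the kernel is only reported when the result is read. The toy model below illustrates that pattern in plain Python. It is not MindSpore's implementation; `StubTensor`, `fake_pad`, and the single-thread executor are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for the background task queue
_worker = ThreadPoolExecutor(max_workers=1)


class StubTensor:
    """Placeholder returned immediately; the real result arrives later."""

    def __init__(self, future):
        self._future = future

    @property
    def shape(self):
        # Reading the attribute waits for the task and re-raises its error here
        return self._future.result()


def fake_pad(out_shape, required, free):
    """Hypothetical op: queues a 'kernel' that fails when memory is short."""

    def kernel():
        if required > free:
            raise RuntimeError("Malloc for kernel output failed, Memory isn't enough")
        return out_shape

    return StubTensor(_worker.submit(kernel))


dead = fake_pad((20, 999, 1098, 198), required=17374927872, free=13904707584)
print("pad returned without error")  # the failure has not been reported yet
try:
    print(dead.shape)
except RuntimeError as err:
    print("raised on access:", err)
```

In MindSpore itself, `mindspore.set_context(pynative_synchronize=True)` is documented as making PyNative kernel execution synchronous for debugging; with it enabled the out-of-memory error would be expected at the `ops.pad` line instead of at `print`, though this has not been verified against the script in section 2.3.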

4 解决方案

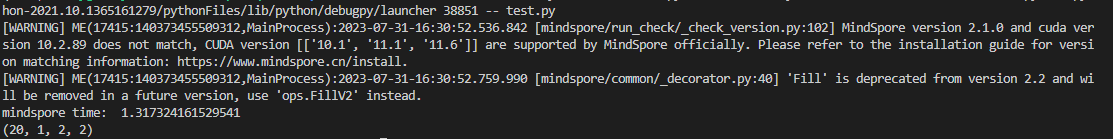

将shape修改小点,可正常print

```python
import time

import numpy as np
import mindspore
import mindspore.ops as ops

mindspore.set_context(device_target="GPU", device_id=0)
mindspore.set_context(mode=mindspore.GRAPH_MODE)

# Much smaller input (20, 1, 1, 1), so the padded output easily fits in GPU memory
np_tensor = np.random.randn(20, 1, 1, 1)
dead = mindspore.Tensor(np_tensor, mindspore.float32)

# Pad the last dim by (0, 1) and the second-to-last dim by (1, 0)
padding = (0, 1, 1, 0)

start_time = time.time()
dead = ops.pad(dead, padding, mode="constant", value=0)
end_time = time.time()
print("mindspore time: ", end_time - start_time)

print(dead.shape)  # (20, 1, 2, 2)
```
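If the full batch is needed rather than a smaller shape, one option (not part of the original solution) is to pad the batch in chunks that each fit. The hypothetical helpers below estimate how many padded samples fit in a memory budget, using integer arithmetic and a headroom percentage. They ignore allocator alignment and any other buffers, so the free-memory figure (taken here from the log) is only a rough input.

```python
def max_batch(per_sample_bytes, free_bytes, headroom_pct=90):
    """Largest number of samples whose outputs fit in headroom_pct% of free_bytes."""
    budget = free_bytes * headroom_pct // 100
    return budget // per_sample_bytes


def chunks(batch, size):
    """Yield (start, stop) ranges covering range(batch) in steps of `size`."""
    if size < 1:
        raise ValueError("not even one sample fits in the budget")
    for start in range(0, batch, size):
        yield start, min(start + size, batch)


# One padded sample: 999 * 1098 * 198 float32 elements, 4 bytes each
per_sample = 4 * 999 * 1098 * 198
size = max_batch(per_sample, free_bytes=13904707584)  # free size from the log
print(per_sample)              # 868746384
print(size)                    # 14
print(list(chunks(20, size)))  # [(0, 14), (14, 20)]
```

Each range could then be padded on its own, e.g. `ops.pad(dead[start:stop], padding, mode="constant", value=0)`; since chunks kept on the device still add up, results would typically be moved to the host (for example with `asnumpy()`) as they are produced.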