1 System Environment

Hardware environment (Ascend/GPU/CPU): Ascend

MindSpore version: 2.2

MindFormers (MF) version: r1.0

Execution mode (PyNative/Graph): any

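2 Error Information

2.1 Problem Description

The following error was reported when automatic weight slicing was configured for a 2-machine job.

2.2 Error Log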

2023-09-22 01:57:56,963 - mindformers[utils.py:387] - INFO - ........Collecting strategy........

2023-09-22 01:57:56,970 - mindformers[utils.py:411] - WARNING - Can't collecting all strategy, device num > 8!

Rank 15: Waiting transforming ckpt........

Rank 15: Transform succeed!

2023-09-22 03:57:57,751 - mindformers[utils.py:534] - INFO - ........Start load checkpoint from checkpoint........

2023-09-22 03:57:57,787 - mindformers[utils.py:242] - INFO - When distributed loads are sliced weights,load_checkpoint should be a checkpoint directory containing the directory of rank_{0-*},The directory structure is as follows: **checkpoint_root_dir/checkpoint/rank_{0-*}/**.ckpt

[WARNING] MD(75354,fffd2e7fc1e0,python):2023-09-22-03:57:58.883.267 [mindspore/ccsrc/minddata/dataset/engine/perf/auto_tune.cc:152] SaveAutotuneConfig] File: <./autotune_15.json> already exists. File will be overwritten with the AutoTuned data pipeline configuration.

[WARNING] MD(75354,ffffaaf35c40,python):2023-09-22-03:57:58.885.382 [mindspore/ccsrc/minddata/dataset/engine/datasetops/data_queue_op.cc:115] ~DataQueueOp] preprocess_batch: 100;

batch_queue: 1, 1, 1, 1, 1, 1, 1, 1, 1, 1;

push_start_time -> push_end_time

Traceback (most recent call last):

File "wizardcoder/run_wizardcoder.py", line 148, in <module>

device_id=args.device_id)

File "wizardcoder/run_wizardcoder.py", line 90, in main

task.finetune(finetune_checkpoint=config.load_checkpoint, auto_trans_ckpt=config.auto_trans_ckpt, resume=resume)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/trainer.py", line 522, in finetune

is_full_config=True, **kwargs)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/causal_language_modeling/causal_language_modeling.py", line 106, in train

**kwargs)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/base_trainer.py", line 616, in training_process

transform_and_load_checkpoint(config, model, network, dataset)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/utils.py", line 309, in transform_and_load_checkpoint

load_ckpt(config, network, optimizer=optimizer)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/utils.py", line 536, in load_ckpt

checkpoint_dict = load_distributed_checkpoint(config)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/utils.py", line 247, in load_distributed_checkpoint

distribute_checkpoint_path = get_last_checkpoint(distribute_checkpoint_dir)

File "/home/wizardcoder/1_wizardcoder-mindformers-916/mindformers/trainer/utils.py", line 552, in get_last_checkpoint

f"{checkpoint_dir} is not a real directory,"

NotADirectoryError: ./output/transformed_checkpoint/rank_15 is not a real directory,When distributed loads are sliced weights,load_checkpoint should be a checkpoint containing the directory of rank_{0-*},The directory structure is as follows: **checkpoint_root_dir/rank_{0-*}/checkpoint/**.ckpt

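3 Root Cause Analysis

Each machine generates only its own strategy files and cannot see the strategy files generated on the other machine. When automatic slicing (auto_trans_ckpt) runs afterwards, the full set of strategy files is therefore missing and the slicing fails. The log reflects this: the warning "Can't collecting all strategy, device num > 8!" is printed while the strategies are being collected, and loading later fails because ./output/transformed_checkpoint/rank_15 does not exist.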
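To make the gap concrete, here is a minimal sketch assuming 8 devices per machine and global ranks assigned contiguously per machine (machine 0 holds ranks 0-7 and machine 1 holds ranks 8-15, which is consistent with "Rank 15" in the log). ranks_on_machine and missing_strategy_ranks are hypothetical helpers for illustration, not MindFormers APIs.

```python
from typing import List


def ranks_on_machine(machine_index: int, devices_per_machine: int = 8) -> List[int]:
    """Global rank ids hosted by one machine (assumes contiguous assignment)."""
    start = machine_index * devices_per_machine
    return list(range(start, start + devices_per_machine))


def missing_strategy_ranks(local_ranks: List[int], world_size: int) -> List[int]:
    """Ranks whose strategy files a machine cannot see locally."""
    local = set(local_ranks)
    return [rank for rank in range(world_size) if rank not in local]
```

Under these assumptions, missing_strategy_ranks(ranks_on_machine(1), 16) returns ranks 0 through 7: the half of the strategy that the machine hosting rank 15 never sees locally.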

4 Solution

Therefore, for multi-machine parallel jobs the weights have to be sliced offline. The steps are as follows:

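- Generate the strategy files on each machine separately. To do this, set only_save_strategy to True; in this mode auto_trans_ckpt has no effect and the weights are not sliced automatically.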

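- Gather the strategy files from both machines into a single folder on one machine (assume the folder is /home/strategy/), then run the weight-slicing script shown in the figure on that machine.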
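The gathering step can be scripted once the other machine's strategy files have been copied over (for example with scp or a shared mount) into a local folder. This is a minimal sketch that assumes the strategy files are named ckpt_strategy_rank_{rank_id}.ckpt and are written under ./output/strategy; both the naming pattern and the location are assumptions, so adjust them to what your run actually produces. find_strategy_files and merge_strategies are hypothetical helpers.

```python
import re
import shutil
from pathlib import Path
from typing import Dict, Iterable, List

# Assumed naming of per-rank strategy files; adjust to what your run produces.
STRATEGY_PATTERN = re.compile(r"ckpt_strategy_rank_(\d+)\.ckpt$")


def find_strategy_files(strategy_dir: str) -> Dict[int, Path]:
    """Map rank id -> strategy file for every matching file in strategy_dir."""
    root = Path(strategy_dir)
    if not root.is_dir():
        return {}
    found = {}
    for path in root.glob("*.ckpt"):
        match = STRATEGY_PATTERN.search(path.name)
        if match:
            found[int(match.group(1))] = path
    return dict(sorted(found.items()))


def merge_strategies(source_dirs: Iterable[str], target_dir: str,
                     world_size: int) -> List[int]:
    """Copy all strategy files into target_dir and return the ranks still missing."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    gathered = set()
    for source in source_dirs:
        for rank, path in find_strategy_files(source).items():
            shutil.copy2(path, target / path.name)
            gathered.add(rank)
    return [rank for rank in range(world_size) if rank not in gathered]
```

For example, merge_strategies(["./output/strategy", "./strategy_from_machine_2"], "/home/strategy/", world_size=16) returns an empty list only when all 16 rank files have been gathered. Here ./strategy_from_machine_2 is a hypothetical folder holding the files copied from the other machine, and world_size=16 assumes 2 machines with 8 devices each.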

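Note: the initial weights are the complete network weights, so --src_ckpt_strategy must not be passed. The correct launch command is therefore as follows (--src_ckpt_dir is the path to the complete weights and contains a further rank_0 subfolder that holds the complete ckpt file; --dst_ckpt_dir is where the sliced model is stored):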

python mindformers/tools/transform_ckpt.py --dst_ckpt_strategy /home/strategy/ --src_ckpt_dir /home/mindspore_models/ --dst_ckpt_dir /home/distribute_models/

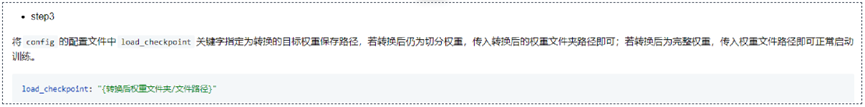
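The slicing step can also be driven from Python, which makes it easy to confirm the layout described in the note (a rank_0 subfolder holding the complete ckpt) before the script runs. This is a minimal sketch: it passes exactly the three arguments used in the command above and assumes it is run from the MindFormers repository root. check_src_layout, build_transform_command and run_transform are hypothetical helper names, not part of MindFormers.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def check_src_layout(src_ckpt_dir: str) -> Path:
    """Return the complete .ckpt under src_ckpt_dir/rank_0, or raise if absent."""
    rank0 = Path(src_ckpt_dir) / "rank_0"
    ckpts = sorted(rank0.glob("*.ckpt")) if rank0.is_dir() else []
    if not ckpts:
        raise FileNotFoundError(f"expected a complete .ckpt file under {rank0}")
    return ckpts[0]


def build_transform_command(dst_ckpt_strategy: str, src_ckpt_dir: str,
                            dst_ckpt_dir: str) -> List[str]:
    """Argument list for transform_ckpt.py. --src_ckpt_strategy is deliberately
    omitted because the source weights are the complete, unsliced network."""
    return [
        sys.executable, "mindformers/tools/transform_ckpt.py",
        "--dst_ckpt_strategy", dst_ckpt_strategy,
        "--src_ckpt_dir", src_ckpt_dir,
        "--dst_ckpt_dir", dst_ckpt_dir,
    ]


def run_transform(dst_ckpt_strategy: str, src_ckpt_dir: str, dst_ckpt_dir: str) -> None:
    """Validate the source layout, then run the slicing script."""
    check_src_layout(src_ckpt_dir)
    cmd = build_transform_command(dst_ckpt_strategy, src_ckpt_dir, dst_ckpt_dir)
    subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
```

run_transform("/home/strategy/", "/home/mindspore_models/", "/home/distribute_models/") mirrors the command above. Keeping the argument list in a separate function also lets you print it for a dry run before slicing.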

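- After slicing, copy the sliced model to the other machine. Once both machines have the sliced model, set load_checkpoint in the yaml file to the dst_ckpt_dir folder above, and keep auto_trans_ckpt set to False.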
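Before launching on each machine, a quick layout check can catch a missing rank folder, which is the cause of the NotADirectoryError in the log, early. This is a minimal sketch that assumes the sliced output under dst_ckpt_dir contains one rank_{id} folder per rank with at least one .ckpt file inside. The search is recursive because the two log messages above describe slightly different nesting. check_sliced_checkpoint and yaml_overrides are hypothetical helpers.

```python
from pathlib import Path
from typing import List


def check_sliced_checkpoint(dst_ckpt_dir: str, world_size: int) -> List[str]:
    """Return a list of problems; an empty list means every rank has a slice."""
    root = Path(dst_ckpt_dir)
    problems = []
    for rank in range(world_size):
        rank_dir = root / f"rank_{rank}"
        if not rank_dir.is_dir():
            problems.append(f"{rank_dir} is not a directory")
        elif not any(rank_dir.rglob("*.ckpt")):
            problems.append(f"{rank_dir} contains no .ckpt file")
    return problems


def yaml_overrides(dst_ckpt_dir: str) -> str:
    """The two yaml settings described above for the final run."""
    return "\n".join([
        f"load_checkpoint: '{dst_ckpt_dir}'",
        "auto_trans_ckpt: False",
    ])
```

check_sliced_checkpoint("/home/distribute_models/", 16) should return an empty list on both machines before training starts. Any reported rank would fail to load at startup, as rank 15 did in the log. The value 16 assumes 2 machines with 8 devices each. The only_save_strategy switch from the first step presumably also needs to be set back to False for the actual run. This is an assumption; the steps above do not state it.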