1 System environment

Hardware environment (Ascend/GPU/CPU): Ascend910

MindSpore version: mindspore=1.10.1

Execution mode (PyNative/Graph): Graph

Python version: Python=3.9.13

Operating system platform: any

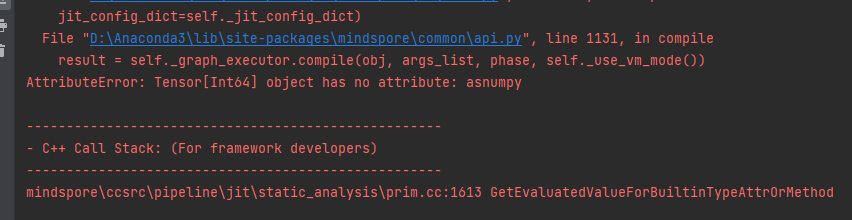

2 Error message

2.1 Problem description

In graph mode on Ascend, the line h_s = int(H_start[idx_H].asnumpy()) fails with AttributeError: Tensor[Int64] object has no attribute: asnumpy.

The environment is mindspore-ascend=1.10.1, running on Ascend 910 series hardware.

2.2 Script code

import mindspore.numpy as np
from mindspore import dtype as mstype
from mindspore import ops, Tensor, context, nn

class AdaptiveAvgPool2d(nn.Cell):
    def __init__(self, output_size):
        """Initialize AdaptiveAvgPool2d."""
        super(AdaptiveAvgPool2d, self).__init__()
        self.output_size = output_size

    def adaptive_avgpool2d(self, inputs):
        """ NCHW """
        H = self.output_size[0]
        W = self.output_size[1]

        H_start = ops.Cast()(np.arange(start=0, stop=H, dtype=mstype.float32) * (inputs.shape[-2] / H), mstype.int64)
        H_end = ops.Cast()(np.ceil(((np.arange(start=0, stop=H, dtype=mstype.float32)+1) * (inputs.shape[-2] / H))), mstype.int64)
        W_start = ops.Cast()(np.arange(start=0, stop=W, dtype=mstype.float32) * (inputs.shape[-1] / W), mstype.int64)
        W_end = ops.Cast()(np.ceil(((np.arange(start=0, stop=W, dtype=mstype.float32)+1) * (inputs.shape[-1] / W))), mstype.int64)

        pooled2 = []
        for idx_H in range(H):
            pooled1 = []
            for idx_W in range(W):
                h_s = int(H_start[idx_H].asnumpy())
                h_e = int(H_end[idx_H].asnumpy())
                w_s = int(W_start[idx_W].asnumpy())
                w_e = int(W_end[idx_W].asnumpy())
                res = inputs[:, :, h_s:h_e, w_s:w_e]
                pooled1.append(ops.ReduceMean(keep_dims=True)(res, (-2,-1)))
            pooled1 = ops.Concat(-1)(pooled1)
            pooled2.append(pooled1)
        pooled2 = ops.Concat(-2)(pooled2)
        return pooled2

    def construct(self, x):
        x = self.adaptive_avgpool2d(x)
        return x

a = AdaptiveAvgPool2d((3,3))
x = Tensor(np.randn(3,12,12,12))
print(a(x))

3 Root cause analysis

In MindSpore 1.10.1, asnumpy() is not supported in graph mode. Upgrading to version 2.0 or later makes asnumpy() usable in graph mode.

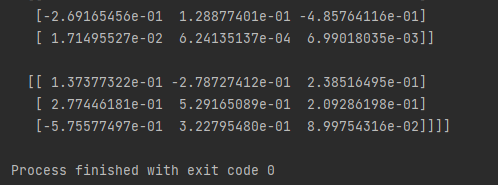

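4 Solution

Looking at the code, there is no need to convert H_start from numpy to a Tensor and then back to numpy, so the conversion step is removed. The same applies to H_end, W_start and W_end.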

import mindspore.numpy as np
from mindspore import dtype as mstype
from mindspore import ops, Tensor, context, nn

class AdaptiveAvgPool2d(nn.Cell):
    def __init__(self, output_size):
        super(AdaptiveAvgPool2d, self).__init__()
        self.output_size = output_size

    def adaptive_avgpool2d(self, inputs):
        """ NCHW """
        H = self.output_size[0]
        W = self.output_size[1]

        # Start and end index of every pooling window along H and W
        H_start = np.arange(start=0, stop=H, dtype=mstype.float32) * (inputs.shape[-2] / H)
        H_end = np.ceil(((np.arange(start=0, stop=H, dtype=mstype.float32) + 1) * (inputs.shape[-2] / H)))
        W_start = np.arange(start=0, stop=W, dtype=mstype.float32) * (inputs.shape[-1] / W)
        W_end = np.ceil(((np.arange(start=0, stop=W, dtype=mstype.float32) + 1) * (inputs.shape[-1] / W)))

        # Cast to integer indices directly, without going through asnumpy()
        H_start = H_start.astype(np.int64)
        H_end = H_end.astype(np.int64)
        W_start = W_start.astype(np.int64)
        W_end = W_end.astype(np.int64)

        pooled2 = []
        for idx_H in range(H):
            pooled1 = []
            for idx_W in range(W):
                h_s = H_start[idx_H]
                h_e = H_end[idx_H]
                w_s = W_start[idx_W]
                w_e = W_end[idx_W]
                # Average over one window, keeping the reduced dims for Concat
                res = inputs[:, :, h_s:h_e, w_s:w_e]
                pooled1.append(ops.ReduceMean(keep_dims=True)(res, (-2,-1)))
            pooled1 = ops.Concat(-1)(pooled1)
            pooled2.append(pooled1)
        pooled2 = ops.Concat(-2)(pooled2)
        return pooled2

    def construct(self, x):
        x = self.adaptive_avgpool2d(x)
        return x

a = AdaptiveAvgPool2d((3,3))
x = Tensor(np.randn(3,12,12,12))
print(a(x))
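
As a further illustration of the window arithmetic used in the script above, the start and end indices can also be computed with plain Python integers, so that no Tensor is involved in the indexing at all. This is only a minimal sketch: the helper name pooling_windows is hypothetical and not part of MindSpore, and it assumes the input height and width are known as ordinary Python ints (for example from inputs.shape with a static shape).

```python
def pooling_windows(in_size, out_size):
    """Return the (start, end) index pair of every adaptive pooling window.

    Hypothetical helper for illustration only, not a MindSpore API.
    start = floor(i * in_size / out_size)
    end   = ceil((i + 1) * in_size / out_size)
    Integer arithmetic is used throughout, so no float rounding is involved.
    """
    windows = []
    for i in range(out_size):
        start = (i * in_size) // out_size
        # Ceiling division written as negated floor division
        end = -((-(i + 1) * in_size) // out_size)
        windows.append((start, end))
    return windows


print(pooling_windows(12, 3))  # [(0, 4), (4, 8), (8, 12)]
print(pooling_windows(10, 3))  # [(0, 4), (3, 7), (6, 10)]
```

Under those assumptions, the two loops in adaptive_avgpool2d could iterate over pooling_windows(inputs.shape[-2], H) and pooling_windows(inputs.shape[-1], W) and slice inputs[:, :, h_s:h_e, w_s:w_e] with the resulting ints. Whether this compiles in a given MindSpore version has not been verified here.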