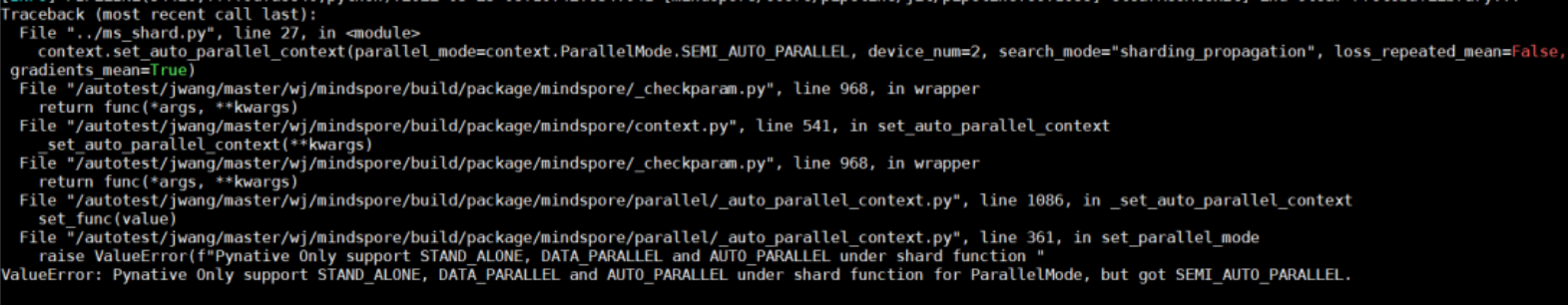

In PyNative mode, setting the parallel mode to SEMI_AUTO_PARALLEL raises the error: PyNative Only support STAND_ALONE, DATA_PARALLEL and AUTO_PARALLEL under shard function for ParallelMode, but got SEMI_AUTO_PARALLEL.

1. System environment

Hardware Environment(Ascend/GPU/CPU): Ascend

Software Environment:

MindSpore version (source or binary): 1.6.1

Python version (e.g., Python 3.7.5): 3.7.6

OS platform and distribution (e.g., Linux Ubuntu 16.04):

GCC/Compiler version (if compiled from source):

2. Script

The launch script is as follows:

    # Raise the per-user process and open-file limits for the training processes.
    ulimit -u unlimited
    ulimit -SHn 65535

    # $1: number of devices on this server; $2: path to the rank table file.
    export DEVICE_NUM=$1
    export RANK_SIZE=$1
    RANK_TABLE_FILE=$(realpath "$2")
    export RANK_TABLE_FILE
    echo "RANK_TABLE_FILE=${RANK_TABLE_FILE}"

    export SERVER_ID=0
    rank_start=$((DEVICE_NUM * SERVER_ID))

    # Start one background training process per device, each in its own directory.
    for ((i = 0; i < DEVICE_NUM; i++)); do
        export DEVICE_ID=$i
        export RANK_ID=$((rank_start + i))
        rm -rf "./train_parallel$i"
        mkdir "./train_parallel$i"
        echo "start training for rank $RANK_ID, device $DEVICE_ID"
        cd "./train_parallel$i" || exit
        env > env.log
        python ../test.py > log 2>&1 &
        cd ..
    done

The contents of test.py are as follows:

    import numpy as np
    import mindspore.dataset as ds
    import mindspore.communication.management as D
    from mindspore import context, Model, Parameter
    from mindspore.nn import Cell, Momentum, SoftmaxCrossEntropyWithLogits
    from mindspore.ops import operations as P
    from mindspore.train.callback import LossMonitor
    from mindspore.train.callback import ModelCheckpoint
    from mindspore.common.initializer import initializer

    step_per_epoch = 4


    def get_dataset(*inputs):
        """Return a generator function that yields the same inputs every step."""
        def generate():
            for _ in range(step_per_epoch):
                yield inputs
        return generate


    class Net(Cell):
        """define net"""
        def __init__(self):
            super().__init__()
            # Operator-level shard strategies for 8 devices.
            self.matmul = P.MatMul().shard(((2, 4), (4, 1)))
            self.weight = Parameter(initializer("normal", [32, 16]), "w1")
            self.relu = P.ReLU().shard(((8, 1),))

        def construct(self, x):
            out = self.matmul(x, self.weight)
            out = self.relu(out)
            return out


    if __name__ == "__main__":
        context.set_context(mode=context.PYNATIVE_MODE, device_target="Ascend", save_graphs=True)
        D.init()
        rank = D.get_rank()
        # PYNATIVE_MODE combined with semi_auto_parallel is what triggers the error.
        context.set_auto_parallel_context(parallel_mode="semi_auto_parallel", device_num=8, full_batch=True)

        # Fixed random input and label so that every rank sees the same data.
        np.random.seed(1)
        input_data = np.random.rand(16, 32).astype(np.float32)
        label_data = np.random.rand(16, 16).astype(np.float32)
        fake_dataset = get_dataset(input_data, label_data)

        net = Net()
        callback = [LossMonitor(), ModelCheckpoint(directory="{}".format(rank))]
        dataset = ds.GeneratorDataset(fake_dataset, ["input", "label"])
        loss = SoftmaxCrossEntropyWithLogits()
        learning_rate = 0.001
        momentum = 0.1
        epoch_size = 1
        opt = Momentum(net.trainable_params(), learning_rate, momentum)
        model = Model(net, loss_fn=loss, optimizer=opt)
        model.train(epoch_size, dataset, callbacks=callback, dataset_sink_mode=False)
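To make the shard strategy used for MatMul in Net more concrete, the following sketch simulates ((2, 4), (4, 1)) on plain Python lists, so it runs without MindSpore or an Ascend device. It assumes the usual reading of a strategy tuple: the first input (16 x 32) is cut into 2 row blocks by 4 column blocks, and the weight (32 x 16) into 4 row blocks by 1 column block, giving 8 block pairs, one per device. The helper names (matmul, block, add, sharded_matmul) and the integer test data are hypothetical and only illustrative; in the real framework the partitioning and the reduction over the contracted dimension are done by the parallel pass and collective communication.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def block(m, parts, index):
    """Return block (i, j) of matrix m cut into parts = (row_parts, col_parts)."""
    rows = len(m) // parts[0]
    cols = len(m[0]) // parts[1]
    i, j = index
    return [row[j * cols:(j + 1) * cols] for row in m[i * rows:(i + 1) * rows]]


def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sharded_matmul(x, w, x_strategy=(2, 4), w_strategy=(4, 1)):
    """Simulate a sharded matmul; returns (result, number of simulated devices)."""
    row_parts, k_parts = x_strategy
    # The contracted dimension must be cut the same way on both inputs.
    assert w_strategy[0] == k_parts
    # This sketch only handles an unsplit output column dimension.
    assert w_strategy[1] == 1
    out, devices = [], 0
    for i in range(row_parts):
        acc = None
        for k in range(k_parts):
            # Work of one simulated device: an 8 x 8 slice times an 8 x 16 slice.
            partial = matmul(block(x, x_strategy, (i, k)), block(w, w_strategy, (k, 0)))
            # Summing the partial results plays the role of the reduction step.
            acc = partial if acc is None else add(acc, partial)
            devices += 1
        out.extend(acc)
    return out, devices


# Integer data keeps the comparison with the unsharded product exact.
x = [[(r * 32 + c) % 7 for c in range(32)] for r in range(16)]
w = [[(r * 16 + c) % 5 for c in range(16)] for r in range(32)]
result, devices = sharded_matmul(x, w)
print(devices, result == matmul(x, w))
```

The simulated run uses 8 block pairs, which matches device_num=8 in the script, and the reassembled result equals the unsharded product.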

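3. Error message

PyNative Only support STAND_ALONE, DATA_PARALLEL and AUTO_PARALLEL under shard function for ParallelMode, but got SEMI_AUTO_PARALLEL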

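4. Error analysis

In PyNative mode, calling context.set_auto_parallel_context(parallel_mode="semi_auto_parallel") triggers this error.

The reason is that automatic parallelism is currently implemented as an optimization pass over the graph during graph compilation. PyNative mode does not run that optimization pipeline, so execution never reaches this pass. The front end therefore validates the configuration up front and rejects it: PyNative mode does not support semi_auto_parallel.

To use automatic parallelism in PyNative mode, see https://mindspore.cn/docs/programming_guide/zh-CN/master/pynative_shard_function_parallel.html and use the shard function in auto_parallel mode. The shard function marks a part of the network to be executed in graph mode, and operator-level model parallelism can be applied inside that part.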
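The front-end validation described in this analysis can be pictured with the small sketch below. It is not MindSpore source code: check_parallel_mode, the string mode names, and the choice of ValueError are hypothetical, and only the list of accepted modes and the wording of the message are taken from the error reported in this post.

```python
# Parallel modes that the error message lists as accepted in PyNative mode.
PYNATIVE_SUPPORTED = ("stand_alone", "data_parallel", "auto_parallel")


def check_parallel_mode(execution_mode, parallel_mode):
    """Hypothetical stand-in for the front-end check; not the real implementation."""
    if execution_mode == "pynative" and parallel_mode not in PYNATIVE_SUPPORTED:
        raise ValueError(
            "PyNative Only support STAND_ALONE, DATA_PARALLEL and AUTO_PARALLEL "
            "under shard function for ParallelMode, but got " + parallel_mode.upper()
        )
    return parallel_mode


# Graph mode accepts semi_auto_parallel; PyNative accepts auto_parallel.
check_parallel_mode("graph", "semi_auto_parallel")
check_parallel_mode("pynative", "auto_parallel")

# The combination used in test.py is rejected.
try:
    check_parallel_mode("pynative", "semi_auto_parallel")
    message = ""
except ValueError as err:
    message = str(err)
print(message)
```

Under these assumptions, the two ways out are the ones named in the analysis: switch the script to graph mode, or keep PyNative mode, set auto_parallel, and wrap the part to be parallelized with the shard function.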