It looks like the definition of the `PositionalEncoding` class wasn't attached.

```python
import mindspore as ms
from mindspore import nn, Parameter
from mindspore.common.initializer import initializer


def init(init_type, shape, dtype, name, requires_grad):
    """Create a Parameter filled by the given initializer."""
    initial = initializer(init_type, shape, dtype).init_data()
    return Parameter(initial, name=name, requires_grad=requires_grad)


class PositionalEncoding(nn.Cell):
    """Sinusoidal positional encoding."""

    # num_hidden: embedding (vector) length; max_len: maximum sequence length
    def __init__(self, num_hidden, dropout, max_len=10000):
        super().__init__()
        self.dropout = nn.Dropout(p=dropout)
        # Create a sufficiently long P, e.g. shape (1, 1000, 32) in this example
        self.P = ms.ops.zeros((1, max_len, num_hidden))
        # In this example X has shape (1000, 16)
        X = ms.ops.arange(max_len, dtype=ms.float32).reshape(-1, 1) / ms.ops.pow(
            10000, ms.ops.arange(0, num_hidden, 2, dtype=ms.float32) / num_hidden)
        # Even columns hold sin, odd columns hold cos
        self.P[:, :, 0::2] = ms.ops.sin(X)
        self.P[:, :, 1::2] = ms.ops.cos(X)

    def construct(self, x: ms.Tensor) -> ms.Tensor:
        # Add the encoding for the first x.shape[1] positions, then apply dropout
        x = x + self.P[:, :x.shape[1], :]
        return self.dropout(x)
```
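The table `P` filled in `__init__` is the usual sinusoidal encoding: for an even column index `i`, `P[pos, i] = sin(pos / 10000^(i/d))` and `P[pos, i+1] = cos(pos / 10000^(i/d))`, with `d = num_hidden`. As a rough cross-check of the values, here is a dependency-free sketch that rebuilds the same table in plain Python; the function name `sinusoidal_pe` is hypothetical and not part of MindSpore, and it assumes `num_hidden` is even, as the `0::2` / `1::2` slicing above does.

```python
import math
from typing import List


def sinusoidal_pe(max_len: int, num_hidden: int) -> List[List[float]]:
    """Plain-Python reference for the P table built in PositionalEncoding.__init__."""
    if num_hidden % 2:
        raise ValueError("num_hidden must be even")
    table = []
    for pos in range(max_len):
        row = [0.0] * num_hidden
        for i in range(0, num_hidden, 2):
            # Same exponent as arange(0, num_hidden, 2) / num_hidden in the Cell
            angle = pos / math.pow(10000, i / num_hidden)
            row[i] = math.sin(angle)      # even column
            row[i + 1] = math.cos(angle)  # odd column
        table.append(row)
    return table


pe = sinusoidal_pe(max_len=4, num_hidden=8)
print(pe[0][:4])  # [0.0, 1.0, 0.0, 1.0]
```

Because `P` is computed once from constants, only the slice `P[:, :x.shape[1], :]` in `construct` depends on the input's sequence length.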

How does it look? Does the conversion succeed?

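When exporting on my side, I found that with the `nn.TransformerEncoder` wrapper class, if the input data has a variable-length dimension, the exported MindIR graph contains control-flow operators, which may be what causes the MindSpore Lite conversion error. You can follow the steps below to check whether the MindIR graph you exported contains control-flow operators.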

- Step 1: In the MindSpore export script, add the following line to save the forward graphs:

```python
ms.context.set_context(save_graphs=True, save_graphs_path="./graph")
```

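- Step 2: Run the following command to convert the MindIR file exported by MindSpore into a human-readable text file. When it finishes, a `load_0000.ir` file is generated in the current directory.

```shell
python view.py -m flow_cnn_res_model.mindir
```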

Contents of `view.py`:

```python
import argparse

from mindspore import load, context

# Dump the graphs built while loading the MindIR, which writes load_0000.ir
context.set_context(save_graphs=2)

if __name__ == '__main__':
    parser = argparse.ArgumentParser(description="Dump MindIR to ir text")
    parser.add_argument("-m", "--model", required=True, nargs=1, type=str,
                        help="MindIR file to be converted to .ir")
    args = parser.parse_args()
    source_file = args.model[0]
    load(source_file)
```

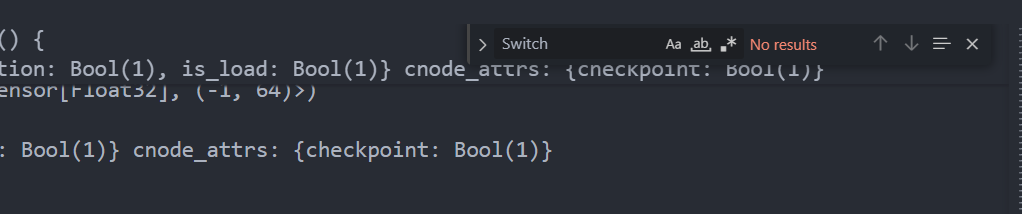

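- Step 3: In the generated `load_0000.ir` file, search for the keyword "Switch" and note the CNode name, which you will then search for in the forward graphs saved in Step 1. In the example below, note `CNode_5805`.

```shell
$ grep 'Switch' load_0000.ir
%29(CNode_5805) = Switch(%26, %27, %28) primitive_attrs: {is_load: Bool(1)}
```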

- Step 4: Search the forward graphs saved in Step 1 for `CNode_5805` (obtained in Step 3). The matching files show the source location that introduced the Switch operator.

```shell
$ grep -r 'CNode_5805' graph/
graph/opt_pass_27_assign_add_opt_0484.ir: %29(CNode_5805) = Switch(%26, %27, %28)
graph/opt_pass_22_label_micro_interleaved_index_0479.ir: %29(CNode_5805) = Switch(%26, %27, %28)
graph/opt_pass_35_interleave_parallel_branches_0492.ir: %29(CNode_5805) = Switch(%26, %27, %28)
graph/opt_pass_38_control_data_broadcast_order_0495.ir: %29(CNode_5805) = Switch(%26, %27, %28)
...
```

Searching for `CNode_5805` in the first file of the grep results shows the code location that introduced the Switch operator: `return self.encoder(seq, src_key_padding_mask=mask)`.

%29(CNode_5805) = Switch(%26, %27, %28)

: (<Bool, NoShape>, <Func, NoShape>, <Func, NoShape>) -> (<Func, NoShape>)

# Scope: (Default/encoder-SeqEncoder/encoder-TransformerEncoder/layers-CellList/0-TransformerEncoderLayer/self_attn-MultiheadAttention)

# In file /home/chenyh/models/bbs/1030/export.py:86~91, 4~32/ def construct(self, seq, seq_attention_mask, mask):/

# In file /home/chenyh/models/bbs/1030/export.py:90, 18~30/ feature = self.encoder(seq, seq_attention_mask, mask)[:, 0, :]/

# In file /home/chenyh/models/bbs/1030/export.py:54~77, 4~59/ def construct(self, seq, seq_attention_mask, mask):/

# In file /home/chenyh/models/bbs/1030/export.py:77, 15~27/ return self.encoder(seq, src_key_padding_mask=mask)/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:626~640, 4~21/ def construct(self, src: Tensor, src_mask: Optional[Tensor] = None, src_key_padding_mask: Optional[Tensor] = None):/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:634, 19~30/ for mod in self.layers:/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:366~390, 4~25/ def construct(self, src: Tensor, src_mask: Optional[Tensor] = None,/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:384, 30~44/ sa_block_result = self._sa_block(input_data, src_mask, src_key_padding_mask)/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:392~397, 4~31/ def _sa_block(self, x, attn_mask, key_padding_mask):/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:393, 12~26/ x = self.self_attn(x, x, x,/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:200~247, 4~29/ def construct(self, query: Tensor, key: Tensor, value: Tensor, key_padding_mask: Optional[Tensor] = None,/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/nn/layer/transformer.py:233, 47~96/ attn_output, attn_output_weights = ops.function.nn_func.multi_head_attention_forward(/

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/ops/function/nn_func.py:8345~8520/def multi_head_attention_forward(query, key, value, embed_dim_to_check, num_heads, in_proj_weight,/

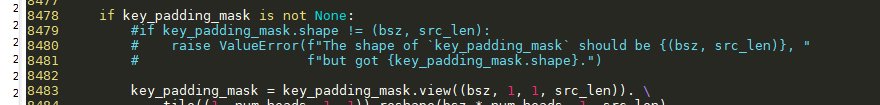

# In file /home/chenyh/miniconda3/envs/ms-py39/lib/python3.9/site-packages/mindspore/ops/function/nn_func.py:8479~8481, 8~66/ if key_padding_mask.shape != (bsz, src_len):/
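The two grep passes above (collect the CNode names of Switch nodes from `load_0000.ir`, then find which saved graph files under `./graph` mention them) can be scripted. Below is a stdlib-only sketch; it assumes the IR line format shown above (`%N(CNode_X) = Switch(...)`), and the helper names `switch_cnodes` and `files_mentioning` are hypothetical.

```python
import re
from pathlib import Path
from typing import List

# Matches IR lines such as: %29(CNode_5805) = Switch(%26, %27, %28) ...
SWITCH_RE = re.compile(r"\((CNode_\d+)\)\s*=\s*Switch\(")


def switch_cnodes(ir_text: str) -> List[str]:
    """Return the CNode name of every Switch node in a dumped .ir text."""
    return SWITCH_RE.findall(ir_text)


def files_mentioning(cnode: str, graph_dir: str) -> List[Path]:
    """Return the saved .ir files under graph_dir whose text contains cnode."""
    # Word boundaries keep CNode_58 from also matching CNode_5805.
    pattern = re.compile(rf"\b{re.escape(cnode)}\b")
    return sorted(
        p for p in Path(graph_dir).rglob("*.ir")
        if pattern.search(p.read_text(encoding="utf-8", errors="replace"))
    )
```

For example, read `load_0000.ir` with `Path("load_0000.ir").read_text()`, pass it to `switch_cnodes`, and call `files_mentioning(name, "graph")` for each name it returns.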

I searched using the method above, and the graph does contain a Switch operator:

```
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:626~640, 4~21/ def construct(self, src: Tensor, src_mask: Optional[Tensor] = None, src_key_padding_mask: Optional[Tensor] = None):/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:634, 19~30/ for mod in self.layers:/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:366~390, 4~25/ def construct(self, src: Tensor, src_mask: Optional[Tensor] = None,/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:384, 30~44/ sa_block_result = self._sa_block(input_data, src_mask, src_key_padding_mask)/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:392~397, 4~31/ def _sa_block(self, x, attn_mask, key_padding_mask):/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:393, 12~26/ x = self.self_attn(x, x, x,/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:200~247, 4~29/ def construct(self, query: Tensor, key: Tensor, value: Tensor, key_padding_mask: Optional[Tensor] = None,/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\nn\layer\transformer.py:233, 47~96/ attn_output, attn_output_weights = ops.function.nn_func.multi_head_attention_forward(/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\ops\function\nn_func.py:8568~8743/def multi_head_attention_forward(query, key, value, embed_dim_to_check, num_heads, in_proj_weight,/
# In file C:\Users\04000387\AppData\Local\Programs\Python\Python311\Lib\site-packages\mindspore\ops\function\nn_func.py:8607~8608, 8~101/ if key.shape != value.shape:/
%8(CNode_5062) = Switch(%3, %4, %7)
: (<Bool, NoShape>, <Func, NoShape>, <Func, NoShape>) -> (<Func, NoShape>)
: (V:s9, <null>, <null>) -> (S:[])
# Fullname with scope: (Default/feature-FlowBaseCNNResModel/seq_encoder-SeqEncoder/encoder-TransformerEncoder/layers-CellList/0-TransformerEncoderLayer/self_attn-MultiheadAttention/Switch-op93)
# In file D:\git\flow-ai\flowaims\models\cnn_res_model.py:158~160, 4~32/ def construct(self, flow, seq, seq_attention_mask):/
# In file D:\git\flow-ai\flowaims\models\cnn_res_model.py:159, 18~30/ feature = self.feature(flow, seq, seq_attention_mask)/
# In file D:\git\flow-ai\flowaims\models\cnn_res_model.py:129~136, 4~35/ def construct(self, flow, seq, seq_attention_mask):/
# In file D:\git\flow-ai\flowaims\models\cnn_res_model.py:131, 14~30/ seq = self.seq_encoder(seq, seq_attention_mask)[:, 0, :]/
# In file D:\git\flow-ai\flowaims\models\unit_model.py:66~76, 4~73/ def construct(self, seq, seq_attention_mask):/
# In file D:\git\flow-ai\flowaims\models\unit_model.py:76, 15~27/ return self.encoder(seq, src_key_padding_mask=seq_attention_mask)/
```

How can this be fixed?

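Use the method above to find the call stack that introduced the Switch operator; the innermost (last) frame shows where the `if` statement comes from. In my example it is `mindspore/ops/function/nn_func.py:8479~8481`. Opening that file shows it is a sanity check on the input shape that raises an exception, and that check is what introduces the Switch operator. Commenting it out does not change the model's inference structure. Try commenting it out, re-exporting the MindSpore forward model, and converting it again with the Lite converter.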
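Locating "the innermost frame" by hand means reading down to the last `# In file` comment under the Switch node. A small stdlib-only sketch of that lookup follows; it assumes, as in the first dump above, that the `: (...)` type lines and `#` comments follow the node line with frames ordered outermost first, and the helper name `innermost_source` is hypothetical.

```python
from typing import Optional


def innermost_source(ir_text: str, cnode: str) -> Optional[str]:
    """Return the last '# In file' comment attached to cnode in an IR dump."""
    lines = ir_text.splitlines()
    for idx, line in enumerate(lines):
        if f"({cnode})" in line and "=" in line:
            last = None
            for follow in lines[idx + 1:]:
                stripped = follow.strip()
                if stripped.startswith("# In file"):
                    last = stripped  # keep overwriting: the final one is innermost
                elif stripped and not stripped.startswith(("#", ":")):
                    break  # reached the next node, so this node's debug info is over
            return last
    return None
```

Applied to the first dump above with `"CNode_5805"`, it should return the `nn_func.py:8479~8481` frame, which is the shape check the reply refers to.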

That didn't work. There is no Switch operator any more, but it still reports this error:

```
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.414.476 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.415.663 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.416.145 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.417.264 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.417.303 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.417.895 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.420.824 [mindspore-lite/tools/converter/import/convert_extend_ops/dense.cc:85] ConvertDensePass] "Dense got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.421.127 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.422.146 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.422.863 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.423.016 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.424.307 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.424.634 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.425.858 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.426.105 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.426.257 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.426.382 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.427.647 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.427.966 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.429.152 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.429.474 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.429.598 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.430.413 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.431.418 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
[ERROR] LITE(178150,ffff9a09d020,converter_lite):2025-11-06-10:03:46.431.624 [mindspore-lite/tools/converter/import/convert_extend_ops/matmul_ext.cc:186] ConvertMatMulExtPass] "MatMulExt got dynamic shape."
```

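Judging from the errors, the model was built in MindSpore dynamic graph mode and the exported MindIR contains mint operators. MindSpore Lite currently supports some mint operators but not others, and a related RFC is already under discussion in the open-source community ([RFC] Hope Lite conversion can support MindSpore's mint interfaces · Issue #ICXG92 · MindSpore/mindspore-lite - Gitee.com).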

For now, the only workaround for mint operators is to call the corresponding `ops` interfaces instead.
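The failing passes in the converter log (`ConvertDensePass`, `ConvertMatMulExtPass`) name the extended operators Lite could not convert. To see at a glance which operators need rewriting, a small sketch can tally them from the log text; the regex assumes the exact `"<Op> got dynamic shape."` message quoted above, and the helper name `dynamic_shape_ops` is hypothetical.

```python
import re
from collections import Counter

# Matches converter_lite messages such as: ConvertDensePass] "Dense got dynamic shape."
DYN_RE = re.compile(r'"(\w+) got dynamic shape\."')


def dynamic_shape_ops(log_text: str) -> Counter:
    """Count 'got dynamic shape' errors per operator in a converter_lite log."""
    return Counter(DYN_RE.findall(log_text))
```

On the log quoted above it should report 18 `MatMulExt` and 7 `Dense` errors.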

Is this related to my training in dynamic graph mode? Would training in static graph mode avoid the problem?

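Yes. Once the control-flow operators above are resolved, you only need to change how some of the operators in the graph are written. No retraining is needed: rewrite those calls and re-export the MindIR.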
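Since the operators that need a different spelling are the mint ones, one way to find them before re-exporting is to scan the model source for `mint` calls. Below is a hedged sketch using Python's `ast` module; it assumes the module is referenced by the name `mint` (e.g. `from mindspore import mint`) and only catches direct calls, not aliases such as `matmul = mint.matmul`. The helper names are hypothetical, and which `ops` replacement fits each hit should be checked against the MindSpore API documentation.

```python
import ast
from typing import List, Optional, Tuple


def _dotted(node: ast.AST) -> Optional[str]:
    """Turn a Name/Attribute chain such as mint.nn.Linear into 'mint.nn.Linear'."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None  # e.g. a call on a subscript or on another call's result


def mint_calls(source: str, alias: str = "mint") -> List[Tuple[int, str]]:
    """Return (line number, callee) for every call whose callee starts with alias."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = _dotted(node.func)
            if name and name.startswith(alias + "."):
                hits.append((node.lineno, name))
    return sorted(hits)
```

Running it over the model files (read with `open(...).read()`) lists each mint call site with its line number, which can serve as a checklist for the rewrite.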